💻 AI for Software Engineering

This page highlights ongoing and past research connecting AI, formal methods, programming languages, and software engineering in the reasoning and learning research group at Georgia Tech, led by Professor Vijay Ganesh.

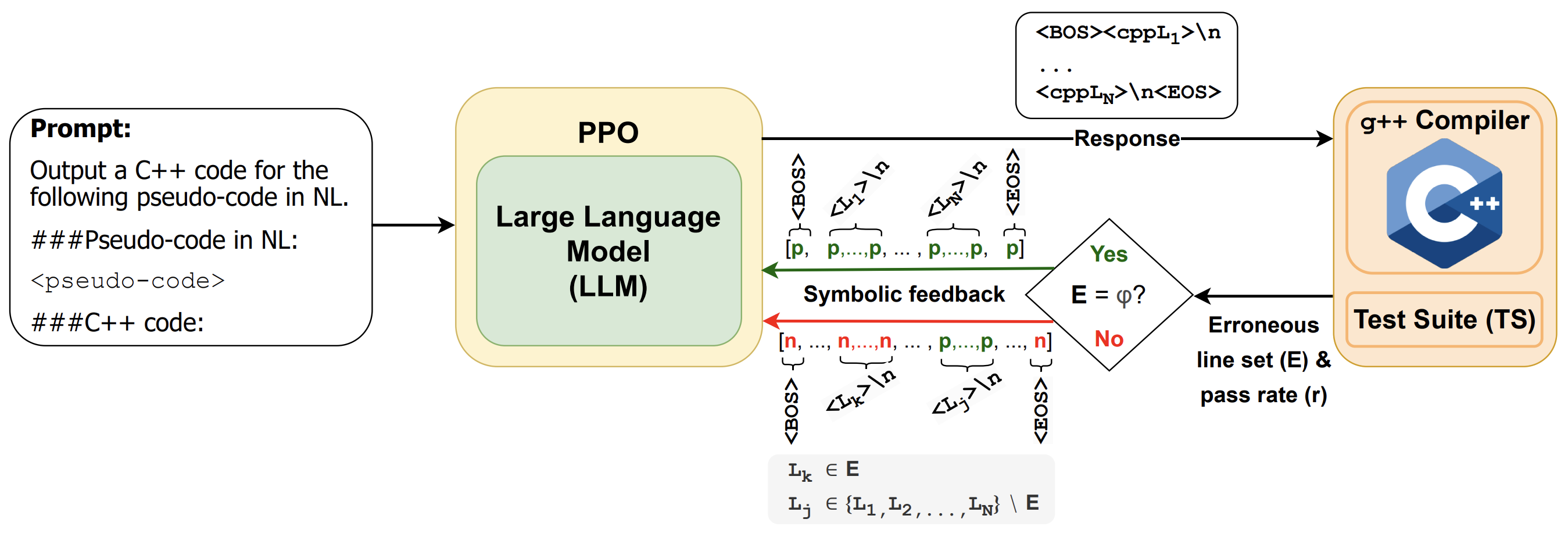

RLSF: Fine-tuning LLMs via Symbolic Feedback (2025)

Authors: Piyush Jha¹, Prithwish Jana¹, Pranavkrishna Suresh¹, Arnav Arora¹, Vijay Ganesh¹

Affiliation: ¹Georgia Institute of Technology, USA

TL;DR: A novel fine-tuning paradigm where symbolic reasoning tools (e.g., solvers, provers, RDKit) provide token-level feedback to LLMs, achieving large accuracy gains on code synthesis, chemistry, and math tasks while remaining far smaller than closed-source models.
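
As a rough illustration of token-level symbolic feedback, the sketch below uses Python's built-in `compile()` as the symbolic tool and scores each whitespace-separated token of a candidate program. The function name `token_level_rewards`, the whitespace tokenization, and the ±1 reward scheme are hypothetical simplifications, not the reward used in RLSF. Tokens on the line the compiler rejects receive -1.0 and every other token receives +1.0, so the learner gets a signal localized to the error rather than a single pass/fail score.

```python
def token_level_rewards(source: str) -> list[tuple[str, float]]:
    """Assign a reward to every whitespace-separated token of `source`.

    Hypothetical scheme: if Python's compiler rejects the program, tokens on
    the reported error line get -1.0 and all other tokens get +1.0; if the
    program compiles, every token gets +1.0.
    """
    error_line = None
    try:
        # compile() only parses and compiles; it does not execute the code.
        compile(source, "<candidate>", "exec")
    except SyntaxError as err:
        error_line = err.lineno  # 1-based line reported by the compiler

    rewards = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for token in line.split():
            reward = -1.0 if lineno == error_line else 1.0
            rewards.append((token, reward))
    return rewards


# Usage: the duplicated "=" on line 2 is penalized token by token.
for token, reward in token_level_rewards("x = 1\ny = = 2\nz = 3\n"):
    print(token, reward)
```

A real tokenizer and a richer tool (a type checker, prover, or chemistry toolkit) would replace `compile()` here; the point is only that the feedback is attached to individual tokens.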

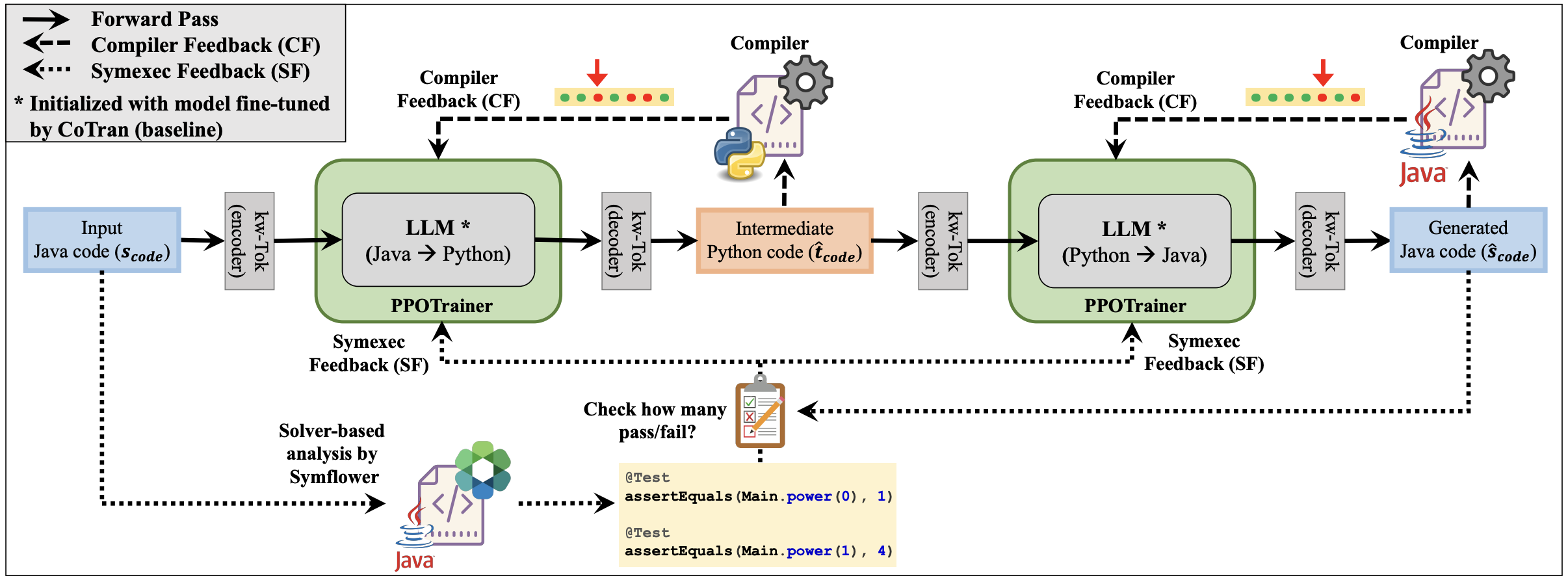

CoTran: An LLM-based Code Translator using Reinforcement Learning with Feedback from Compiler and Symbolic Execution (2024)

Authors: Prithwish Jana¹, Piyush Jha¹, Haoyang Ju², Gautham Kishore³, Aryan Mahajan⁴, Vijay Ganesh¹

Affiliations: ¹Georgia Institute of Technology, USA | ²University of Toronto, Canada | ³UC San Diego, USA | ⁴Columbia University, USA

TL;DR: Fine-tunes LLMs for end-to-end code translation using compiler and symbolic-execution feedback to improve compilability and functional equivalence.
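
A reward combining compiler feedback with an equivalence check can be sketched as below. This is a simplified stand-in, not CoTran's reward: both programs are assumed to be Python source defining a function `f` (CoTran itself translates between languages), and random differential testing replaces symbolic execution. The name `translation_reward` and its 0.0 / 0.5 / 1.0 scale are hypothetical.

```python
import random


def translation_reward(reference_src: str, candidate_src: str,
                       trials: int = 100, seed: int = 0) -> float:
    """Hypothetical reward for a candidate translation of `reference_src`.

    0.0 if the candidate does not compile or fails to define `f`; otherwise
    0.5 plus 0.5 times the fraction of random integer inputs on which the
    candidate's `f` agrees with the reference's `f`. Random testing stands in
    for symbolic execution. exec() runs arbitrary code: sandbox it in practice.
    """
    try:
        candidate_code = compile(candidate_src, "<candidate>", "exec")
    except SyntaxError:
        return 0.0  # compiler feedback: the translation does not compile

    ref_ns: dict = {}
    cand_ns: dict = {}
    exec(reference_src, ref_ns)
    try:
        exec(candidate_code, cand_ns)
    except Exception:
        return 0.0  # candidate crashes while being loaded
    ref_f, cand_f = ref_ns["f"], cand_ns.get("f")
    if not callable(cand_f):
        return 0.0

    rng = random.Random(seed)  # fixed seed keeps the reward reproducible
    agree = 0
    for _ in range(trials):
        x = rng.randint(-1000, 1000)
        try:
            agree += cand_f(x) == ref_f(x)
        except Exception:
            pass  # a runtime error on this input counts as disagreement
    return 0.5 + 0.5 * agree / trials


reference = "def f(x):\n    return x * 2\n"
print(translation_reward(reference, "def f(x):\n    return x + x\n"))
```

Splitting the reward this way gives partial credit for compilable but wrong output, which is one plausible way to shape a reinforcement-learning signal; the actual weighting in CoTran may differ.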

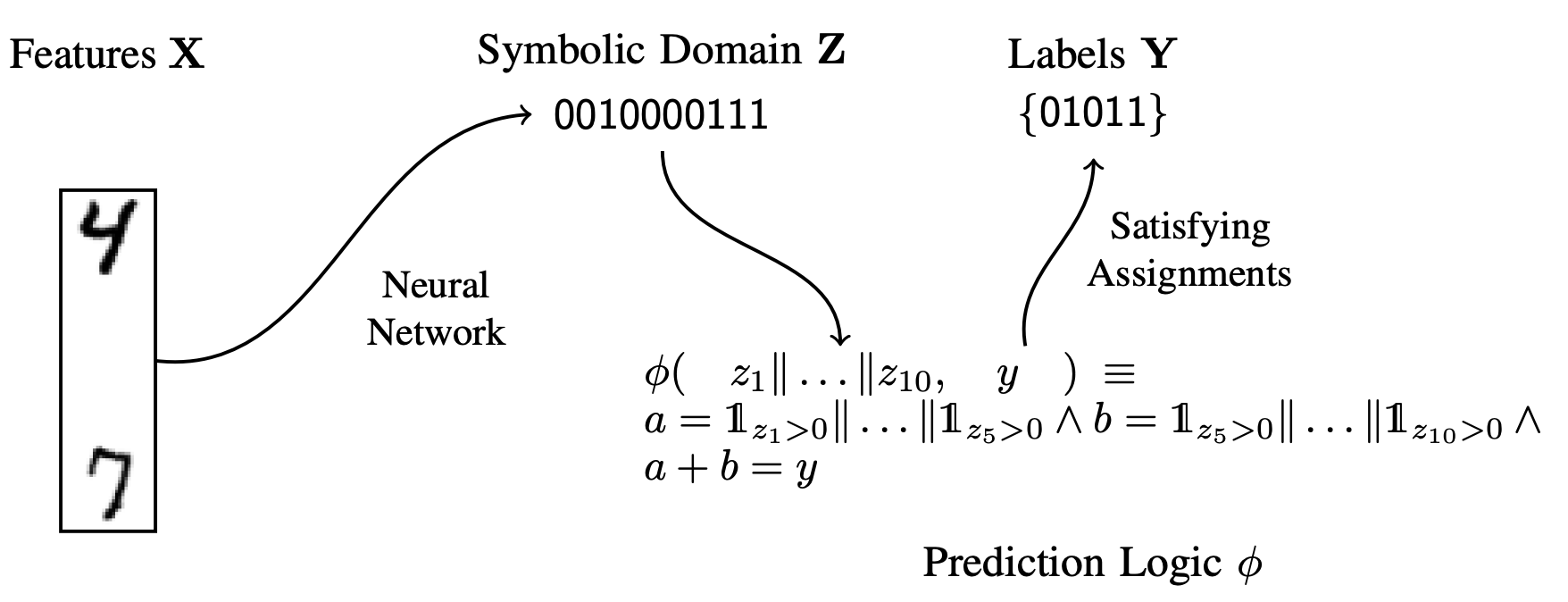

Grounding Neural Inference with Satisfiability Modulo Theories (2023)

Authors: Zifan Wang¹, Saranya Vijayakumar², Kaiji Lu³, Vijay Ganesh⁴, Somesh Jha⁵, Matt Fredrikson²

Affiliations: ¹Center for AI Safety | ²Carnegie Mellon University, USA | ³Pinterest Inc. | ⁴Georgia Institute of Technology, USA | ⁵University of Wisconsin-Madison, USA

TL;DR: Introduces SMTLayer, enabling neural networks to enforce logical constraints directly during both forward and backward passes without requiring the solver to be differentiable.

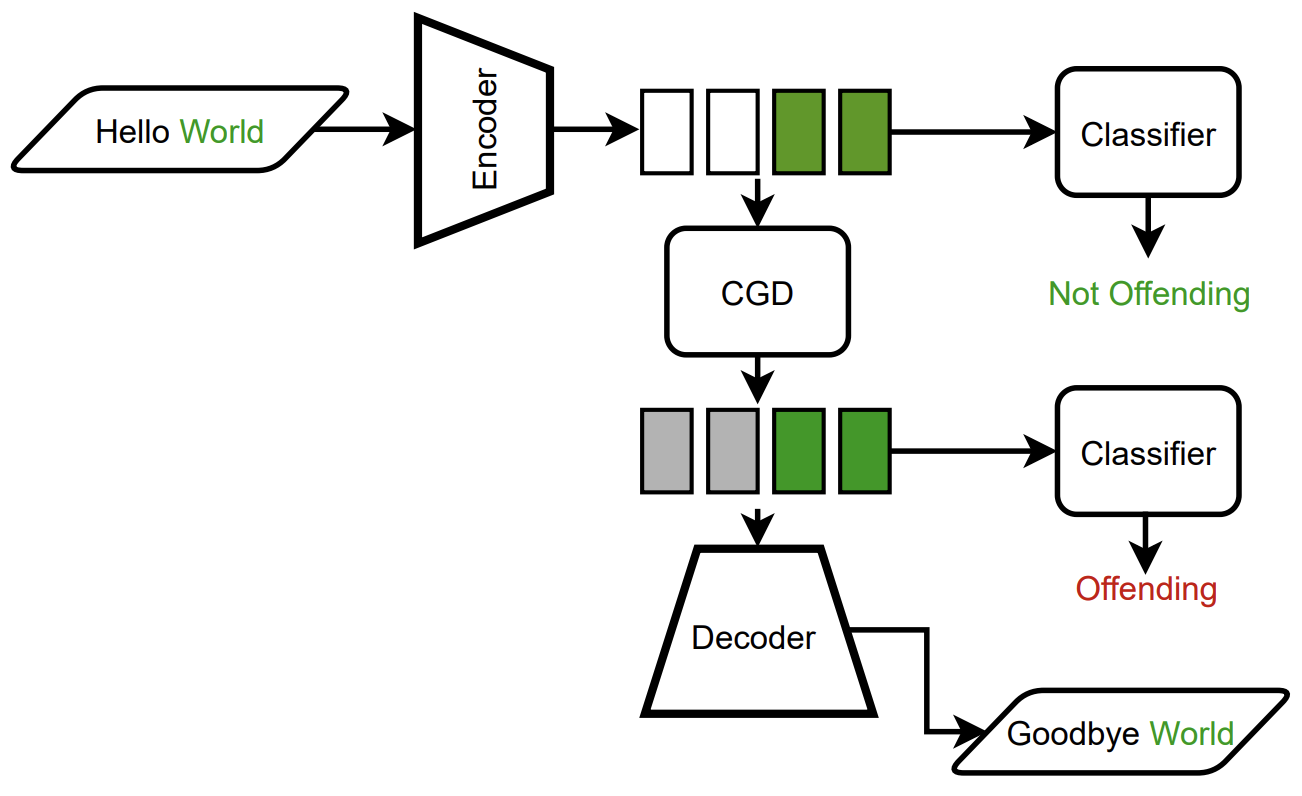

CGDTest: A Constrained Gradient Descent Algorithm for Testing Neural Networks (2023)

Authors: Vineel Nagisetty¹, Laura Graves¹, Guanting Pan¹, Piyush Jha¹, Vijay Ganesh¹

Affiliation: ¹University of Waterloo, Canada

TL;DR: Applies constrained gradient descent to systematically test deep networks, uncovering adversarial robustness and fairness issues more effectively than existing testing tools.
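
The idea of searching for a failing input by gradient descent under constraints can be illustrated with projected gradient descent on a toy linear classifier. This is a simplified stand-in, not CGDTest's algorithm: the weights `w`, bias `b`, L∞ budget `eps`, and the function `find_counterexample` are hypothetical, and a real network would need automatic differentiation to obtain the gradient.

```python
def find_counterexample(w, b, x0, eps, lr=0.1, steps=100):
    """Search the L-infinity ball of radius `eps` around `x0` for an input
    whose predicted label (sign of w.x + b) differs from that of `x0`.

    Projected gradient descent: step against the label-preserving direction,
    then clip each coordinate back into [x0_i - eps, x0_i + eps].
    """
    def score(x):
        return sum(wi * xi for wi, xi in zip(w, x)) + b

    label = 1 if score(x0) >= 0 else -1
    x = list(x0)
    for _ in range(steps):
        # For a linear model, d(label * score)/dx = label * w; descend on it.
        x = [xi - lr * label * wi for xi, wi in zip(x, w)]
        # Projection step: enforce the constraint |x_i - x0_i| <= eps.
        x = [min(max(xi, x0i - eps), x0i + eps) for xi, x0i in zip(x, x0)]
        if (1 if score(x) >= 0 else -1) != label:
            return x  # constraint-satisfying input that flips the prediction
    return None  # no violation found within the budget


print(find_counterexample([1.0, -2.0], 0.5, [1.0, 0.0], eps=1.0))
```

Returning `None` only means this search found no violation; unlike a solver-based verifier, gradient-based testing cannot prove that none exists.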

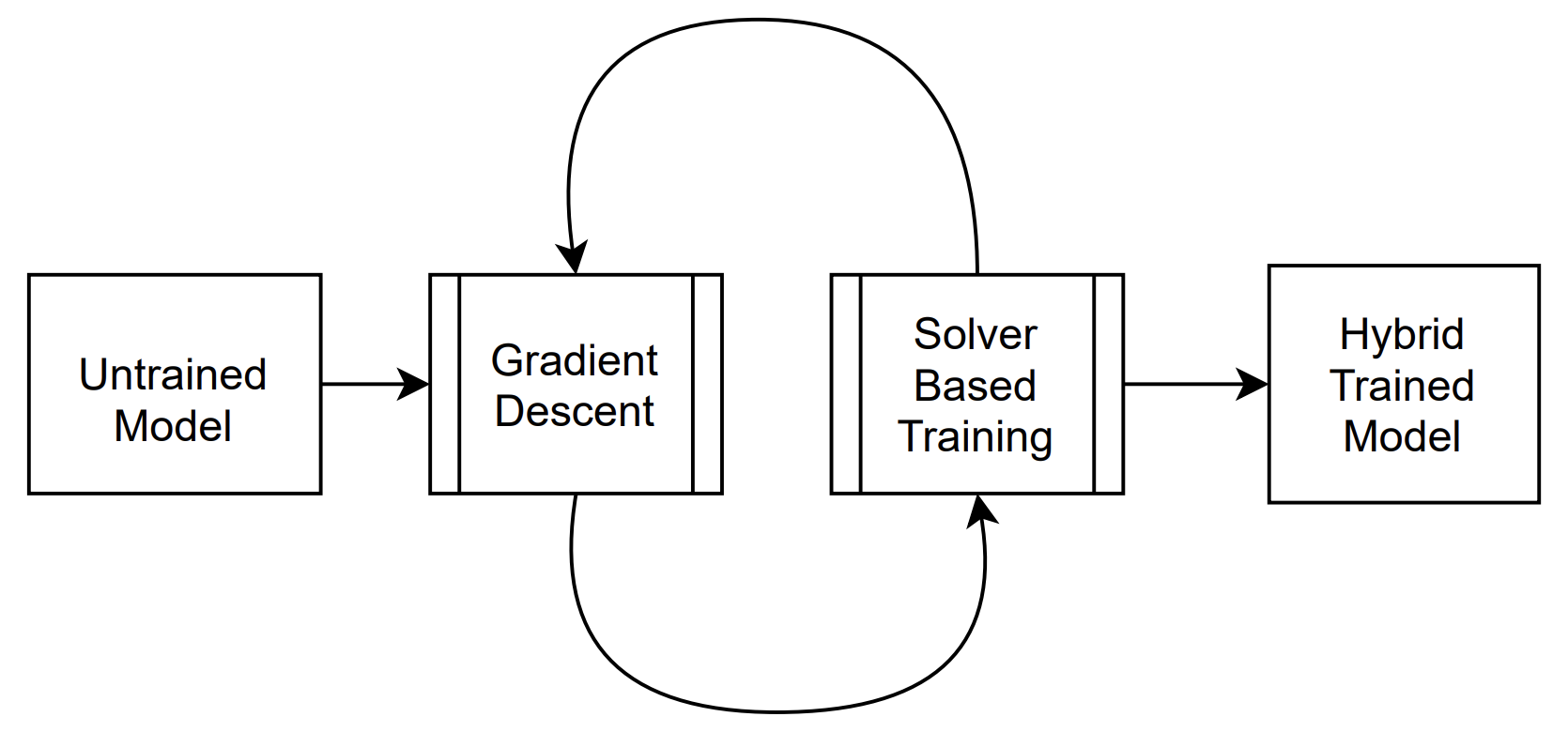

A Solver + Gradient Descent Training Algorithm for Deep Neural Networks (2022)

Authors: Dhananjay Ashok¹, Vineel Nagisetty², Christopher Srinivasa², Vijay Ganesh³

Affiliations: ¹University of Toronto, Canada | ²Borealis AI | ³University of Waterloo, Canada

TL;DR: Combines gradient descent with MILP solver steps to escape poor local minima and accelerate convergence on classification and regression tasks.

Amnesiac Machine Learning (2021)

Authors: Laura Graves¹, Vineel Nagisetty¹, Vijay Ganesh¹

Affiliation: ¹University of Waterloo, Canada

TL;DR: Develops unlearning techniques that remove the influence of specific training data to satisfy privacy laws like GDPR while maintaining model performance.
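
One simple way to remove specific examples' influence is to log the parameter update applied by every batch that contains them and subtract those updates when a deletion request arrives. The toy sketch below does this for a one-parameter linear regression trained with mini-batch SGD; the data layout and the helpers `train_with_ledger` and `amnesiac_unlearn` are hypothetical illustrations under our own assumptions, not the paper's implementation.

```python
def train_with_ledger(data, sensitive_ids, lr=0.01, epochs=5, batch_size=2):
    """Fit y ~ w * x by mini-batch SGD on squared error.

    data: list of (example_id, x, y). Returns the final weight and a ledger
    of the updates applied by every batch containing a sensitive example.
    """
    w = 0.0
    ledger = []
    for _ in range(epochs):
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            # Gradient of the batch's mean squared error with respect to w.
            grad = sum(2 * (w * x - y) * x for _, x, y in batch) / len(batch)
            delta = -lr * grad
            w += delta
            if any(ex_id in sensitive_ids for ex_id, _, _ in batch):
                ledger.append(delta)  # remember this batch's contribution
    return w, ledger


def amnesiac_unlearn(w, ledger):
    """Undo the logged updates. Later updates were computed from states that
    included the sensitive batches, so some residual influence can remain."""
    return w - sum(ledger)


data = [(0, 1.0, 2.0), (1, 2.0, 4.0), (2, 3.0, 6.0), (3, 1.0, 20.0)]
w, ledger = train_with_ledger(data, sensitive_ids={3})
print(w, amnesiac_unlearn(w, ledger))
```

Subtracting logged updates is cheap but approximate; retraining from scratch without the sensitive data is exact but far more expensive, which is the trade-off unlearning methods try to balance.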

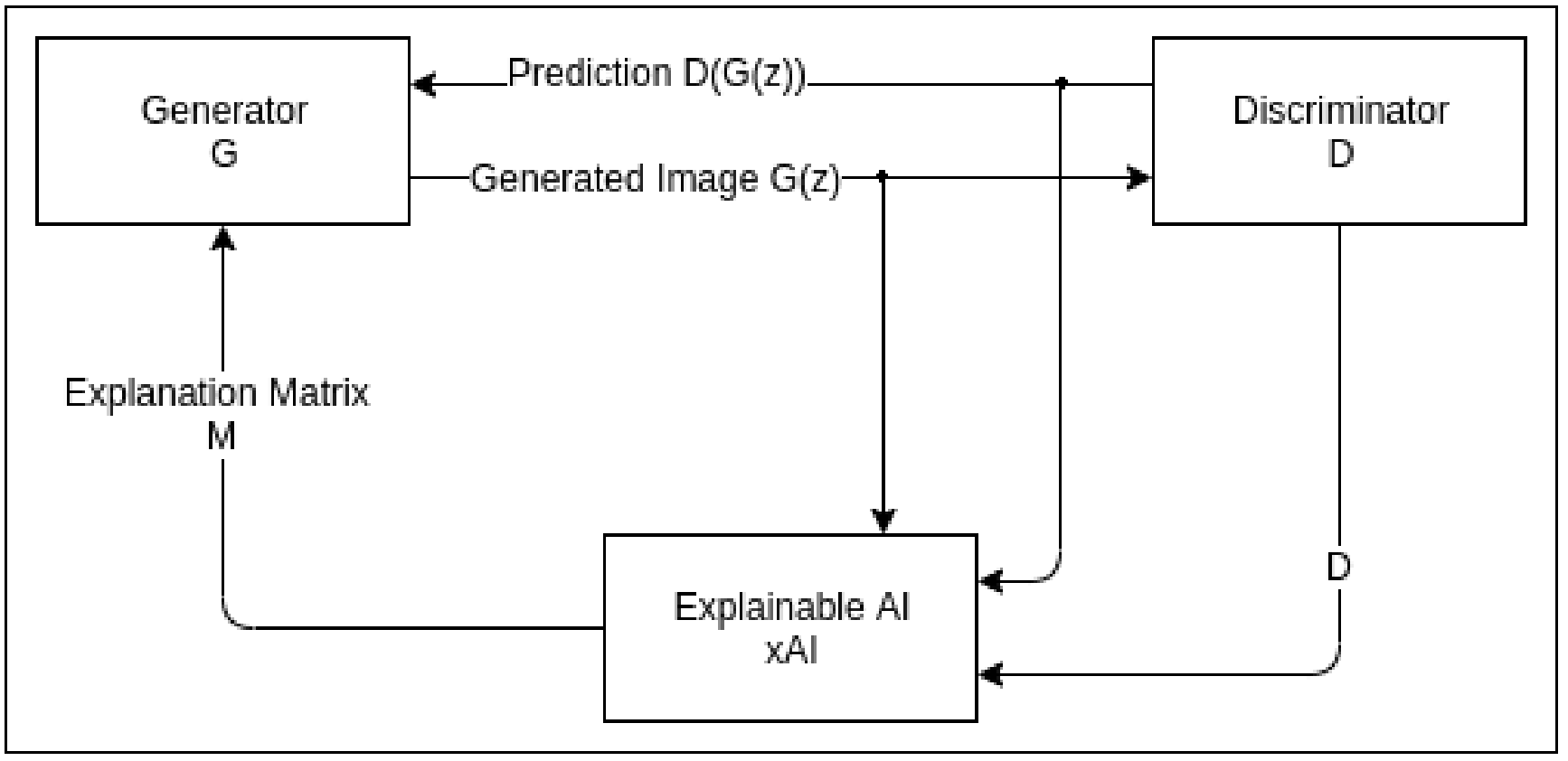

xAI-GAN: Enhancing Generative Adversarial Networks via Explainable AI Systems (2021)

Authors: Vineel Nagisetty¹, Laura Graves¹, Joseph Scott¹, Vijay Ganesh¹

Affiliation: ¹University of Waterloo, Canada

TL;DR: Leverages explainability to guide GAN training, improving both the interpretability and quality of generated outputs.

Published in AAAI-21 (Explainable Agency Workshop).